Proxmox 4Gbit/s HA Networking with two Dual-Port NICs and VLAN-enabled Bonding to distinct Switches

We recently set up a new Proxmox 4.4 server. For this we upgraded our former ESXi 5.5 host with more RAM, a RAID-10 array (replacing a RAID-1 with hot spare) and two dual-port 1Gbit/s NICs (4x 1Gbit/s on two PCIe cards with the Intel I350 chipset), which replace the two single-port onboard Broadcom NICs used so far.

Every server is connected to both HP ProCurve switches in its rack, with at least one NIC going to each switch. The switches are not stacked, so a server can only use one NIC at a time: the NICs are combined in a Linux bond running in active-backup mode (mode 1).
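For reference, here is a minimal sketch of such an active-backup bond in /etc/network/interfaces syntax. The slave names eth0 and eth1 and the address placeholders are assumptions for illustration, not copied from our actual servers:

```
# Sketch only: eth0/eth1 and the address placeholders are hypothetical
# bond-mode active-backup is mode 1: one slave carries all traffic,
# the other takes over when the active slave loses its link
auto bond0
iface bond0 inet static
    address <Server IP address>
    netmask 255.255.255.0
    gateway <Network Gateway>
    slaves eth0 eth1
    bond-mode active-backup
    bond-miimon 100
```

The bonding driver accepts either the mode number or its name, so "bond-mode 1" would work just as well.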

For the upgrade we wanted more bandwidth while still preserving high availability.

Examining the best NIC Setup

For a first overview, we have the following NICs available in the server:

- 2x 1Gbit/s Onboard Broadcom NICs (with both Ports shared with iDRAC Express)

- 2x 1Gbit/s PCIe x4 Intel I350 Network Card

- 2x 1Gbit/s PCIe x4 Intel I350 Network Card

We knew we wanted at least 2Gbit/s available at all times, with redundancy in case a cable fails. We also didn't want to use the onboard NICs anymore, since they're shared with the iDRAC, and we wanted to use only one kind of hardware (so Intel only).

Our first idea was to create two LACP bonds, one from each dual-port NIC to one of the switches, and put a third, active-backup bond on top of the two LACP bonds. Sadly, you cannot bond bonds in Linux (why not, actually? If you know, I'd like to see an answer in the comments!).

With this idea abandoned, we went through all the available bonding modes. Reading about mode 5 (balance-tlb) made our eyes light up, and mode 6 (balance-alb) even more so. Mode 5 balances outgoing traffic across all slaves; mode 6 additionally balances incoming IPv4 traffic by answering ARP requests with the MAC addresses of different slaves. Mode 6 looked like the right choice for us: it doesn't need any special switch configuration, and the switches can be completely independent of each other. They only have to be configured identically from a VLAN perspective, which is easy on a managed Layer 2 switch.

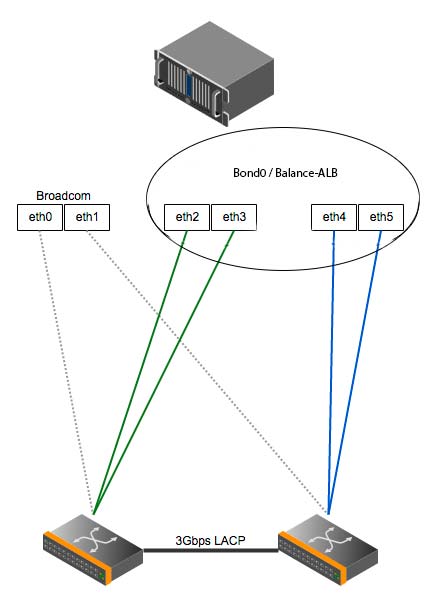

So our final network diagram with bonding mode 6 looked as follows:

As you can see, we now have a single Linux bond in mode 6 spanning both cards and both switches.

The actual Proxmox configuration was done entirely in the /etc/network/interfaces file and now looks like this:

auto lo
iface lo inet loopback

iface eth2 inet manual
    bond-master bond0

iface eth3 inet manual
    bond-master bond0

iface eth4 inet manual
    bond-master bond0

iface eth5 inet manual
    bond-master bond0

auto bond0
iface bond0 inet static
    address <Network address for Proxmox Management GUI/SSH>
    netmask 255.255.255.0
    gateway <Network Gateway>
    slaves eth2 eth3 eth4 eth5
    bond-miimon 100
    bond-mode 6
    bond-downdelay 200
    bond-updelay 200

Going further with VLANs

Now that we have a working network setup, we can create VLANs and then the vmbr devices on top of them, which the virtual machines will use later.

To understand the configuration shown here, you need to know how we configured the VLANs on the switches. Each switch carries several VLANs:

* Management VLAN (VLAN 100)

* Production VLAN (VLAN 200)

* and some more VLANs I'll leave out here...

On each switch the Management VLAN is configured untagged, so we can simply assign the IP for the Proxmox Management GUI and SSH to bond0. All other VLANs are tagged (I only added one more here, so the config stays short enough to get the idea).

The config now continues like this:

auto bond0.200
iface bond0.200 inet manual
    vlan_raw_device bond0

We added another virtual interface with VLAN tag 200 and assigned bond0 as its raw device. This virtual interface now receives the VLAN 200-tagged packets arriving on bond0. You can add more tagged VLANs according to your needs.

After that we have to create a vmbr device for each "VLAN device" so we can use them for actual virtual machines.

auto vmbr200
iface vmbr200 inet manual
    bridge_ports bond0.200
    bridge_stp off
    bridge_fd 0

You can name vmbr200 however you like. We included the VLAN ID so it's obvious which VLAN a new virtual machine is attached to. The VM itself doesn't need to support VLANs: it communicates without any VLAN tag, and Proxmox takes care of the tagging completely.
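Adding another VLAN follows the same pattern on both layers. A minimal sketch for a hypothetical VLAN 300 (not one of our actual VLANs; it would also have to be configured as tagged on both switches):

```
# Hypothetical VLAN 300: VLAN interface on top of the bond
auto bond0.300
iface bond0.300 inet manual
    vlan_raw_device bond0

# Bridge for VMs in the hypothetical VLAN 300; the VMs send untagged frames
auto vmbr300
iface vmbr300 inet manual
    bridge_ports bond0.300
    bridge_stp off
    bridge_fd 0
```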

Additional Information

We deliberately left out eth0 and eth1, since those are the ports of the onboard Broadcom NIC, which we don't want to use in our Proxmox server.

Also make sure you pick the right ethX devices in your configuration. You can check that, for example, by comparing MAC addresses. In our case the NICs were already ordered correctly from the start, which made it a bit easier.

You may also want to reboot once after finishing the configuration to make sure everything comes up correctly. Our HA tests also showed that the config works really well: depending on whether the slave interface we cut was carrying the connection at that moment, we lost between zero and three pings before the connection was back, and with a TCP-based test such as an SSH session, the connection didn't even drop.

Conclusion

This guide should also work for older and newer Proxmox versions as well as any other Linux distribution. Proxmox 5.0 is out now, and its underlying Debian Stretch uses predictable network interface names such as eno1, eno2 and so on. The setup above should work just as well with the new naming; only the interface names change. The onboard Broadcom ports will most likely be eno1 and eno2, while the ports of the added PCIe cards should get slot- or path-based names such as ens3f0 and ens3f1 (or enp3s0f0 and similar, depending on the firmware). You'll have to check which names your NICs actually got.
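Assuming, purely as an example, that the four Intel ports show up as ens3f0, ens3f1, ens4f0 and ens4f1 (check the output of ip link for the names your system actually assigns), only the slave stanzas and the slaves line of bond0 would change:

```
# Hypothetical interface names; use whatever ip link reports on your host
iface ens3f0 inet manual
    bond-master bond0

iface ens3f1 inet manual
    bond-master bond0

iface ens4f0 inet manual
    bond-master bond0

iface ens4f1 inet manual
    bond-master bond0

# In the bond0 stanza only the slaves line changes:
#     slaves ens3f0 ens3f1 ens4f0 ens4f1
```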